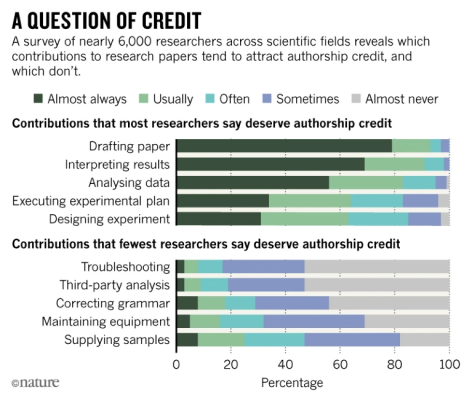

The role of the research author is a given in the scholarly world; however, how “authorship” itself is defined has historically been a thornier issue. A 2018 survey of nearly 6,000 researchers across 21 scientific disciplines showed marked differences of opinion between disciplines and even within fields. More than two-thirds of respondents agreed that they would grant the role of ‘author’ to a participant who interpreted research data or drafted the manuscript, yet almost half responded that they would not, or would only grant authorship to the researcher(s) who secured the initial funding.

This disparity and confusion comes as little surprise given that there is no recognised standard or definition of “authorship” across all research disciplines. For example, physics papers may list thousands of authors, while lists in the natural sciences or humanities are in general much (much) shorter. While organisations such as the International Committee of Medical Journal Editors and the NIH have set out guidelines for what constitutes “authorship”, these may prove contentious even within their subfields.

The 2018 study concluded that:

“Most researchers agreed with the NIH criteria and grant authorship to individuals that draft the manuscript, analyze and interpret data, and propose ideas. However, thousands of the researchers also value supervision and contributing comments to the manuscript whereas the NIH recommends discounting these activities when attributing authorship. People value the minutiae of research beyond writing and data reduction: researchers in the humanities value it less than those in pure and applied sciences; individuals from Far East Asia and Middle East and Northern Africa value these activities more than anglophones and northern Europeans.”

There has also been a growing call over recent years to provide transparency and accountability in order to promote scientific integrity and responsibility within the research community. With the growth of fake news and the rise of predatory journals, it is more important than ever that the community itself, the press, and the public have tools with which to ascertain a level of trust and veracity in a given publication. As a 2017 preprint on ‘Transparency in Authors’ Contributions and Responsibilities to Promote Scientific Integrity in Scientific Publication’ succinctly puts it, “the notion of authorship implies both credit and accountability”.

Image from Nature https://www.nature.com/articles/d41586-018-05280-0

Not only do definitions of authorship differ, but the ways in which authorship is credited and attributed are often narrow in scope and risk obscuring the roles of other types of contributor in the creation of research outputs, whether those be traditional publications or other types of output (such as datasets, software, and protocols, or ‘softer’ outputs such as keynotes and conference panels, poster sessions, or public interest articles and media engagement).

Traditional contributor roles include things like planning, conducting, and reporting research. Non-traditional contributions may include less formally recognised roles, such as acquisition of funding, research supervision, data curation, search services through the library, software services and support, or preparation of lab reagents.

CRediT (the Contributor Roles Taxonomy) was introduced in 2014 to begin to address this issue and move from a model of authorship toward a model of contribution, and now covers 14 subtypes of contributor role. As most Elements users will be aware, we have used the CRediT taxonomy within Elements since 2018 to help simplify the capture of contributions undertaken by authors linked to a publication. Symplectic has also always allowed users of Elements to associate their accounts with publications as authors, translators, or other contributors, and this continues to this day.

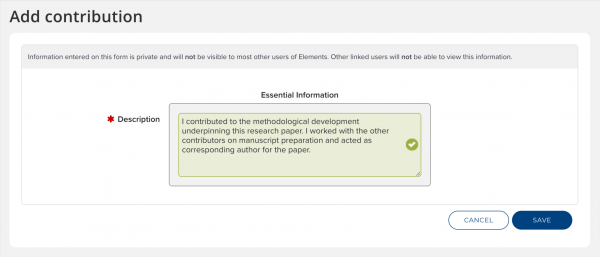

However, the adoption of the CRediT taxonomy within Elements was only a stepping stone towards allowing Elements users to better describe their personal contribution to a specific publication. We are pleased to announce that we are now going further with the addition of ‘Annotations’ functionality within Elements, which will allow users and administrators to add explanatory, narrative statements to linked objects within the system, such as publications, grants, or non-standard outputs.

The Elements data model allows the capture of a rich and flexible set of linked metadata about objects in the system (e.g. publications, grants) and their contributors.

Object annotations will be available for publications, grants, professional activities, and teaching activities, and a user will be able to annotate any of these objects with which they have a relationship. Annotations are configurable per object type, so the nature of the information captured is defined by Elements institutional administrators, with a range of supporting fields including guidance on how your users should fill out an Annotation.

We’ve built on Elements’ existing privacy model so that the information is private to the user and to their administrator(s). This gives researchers the opportunity to build up private personal statements that describe in detail the nuance of their own contributions. Administrators can browse all Annotations on a given object, and Annotations will also be available within the reporting database to support custom reporting and specific data reuse scenarios (for example, the capture of more granular descriptions required in employment applications and/or required by tenure and promotion committees).
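For readers who think in code, the rules described above can be pictured with a small Python sketch. This is purely illustrative and is not the Elements data model or API: the names `Annotation`, `AnnotationStore`, `add`, and `visible_to` are hypothetical, and the only behaviour modelled is what this post states (annotations on four object types, added by users who have a relationship with the object, visible to their author and to administrators).

```python
from dataclasses import dataclass, field

# Object types this post says will support annotations.
ANNOTATABLE_TYPES = {
    "publication",
    "grant",
    "professional activity",
    "teaching activity",
}


@dataclass
class Annotation:
    """A narrative statement attached to one object (hypothetical model)."""
    object_id: str
    object_type: str
    author: str  # the user who wrote the statement
    text: str


@dataclass
class AnnotationStore:
    """Toy in-memory store; all names here are assumptions for illustration."""
    # (user, object_id) pairs recording that a user is linked to an object
    relationships: set = field(default_factory=set)
    administrators: set = field(default_factory=set)
    annotations: list = field(default_factory=list)

    def add(self, user: str, object_id: str, object_type: str, text: str) -> Annotation:
        """Attach a statement, but only for a user linked to the object."""
        if object_type not in ANNOTATABLE_TYPES:
            raise ValueError(f"annotations are not supported on {object_type!r}")
        if (user, object_id) not in self.relationships:
            raise PermissionError(f"{user} has no relationship with {object_id}")
        note = Annotation(object_id, object_type, user, text)
        self.annotations.append(note)
        return note

    def visible_to(self, viewer: str, object_id: str) -> list:
        """Authors see their own annotations; administrators see all of them."""
        return [
            a for a in self.annotations
            if a.object_id == object_id
            and (a.author == viewer or viewer in self.administrators)
        ]
```

In this sketch, two co-authors annotating the same publication would each see only their own statement, while an administrator browsing that publication would see both.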

The key motivation that has always underpinned Elements is to better enable researchers, faculty, and their institutions to build a truly comprehensive picture of the modern scholarly ecosystem. By making it possible for researchers to showcase non-traditional types of contribution, we can demonstrate the breadth and depth of a researcher or faculty member’s day-to-day work. This data, once captured on an ongoing basis, becomes invaluable when it comes to career-building activities such as Promotion & Tenure, and research-promoting activities such as press and public engagement.

At the end of the day, what this means is that it’s now even easier for your users to get credit where credit is due.

You can find out more about how to use the new Annotations functionality as part of Elements 6.10 in this short, 15-minute introduction and demo.